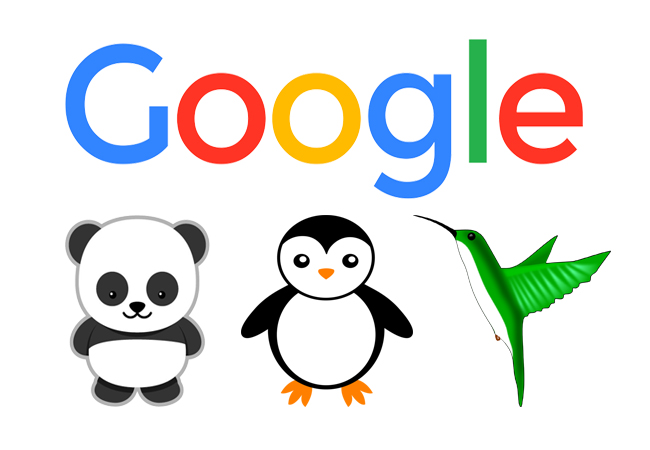

What the Google Animals Taught Us in the Last Two Years

I don’t know about you, but isn’t it a wonder that Google’s best-known updates are named after animals? Maybe they’ve become animal lovers, or maybe they’re so submerged in changing algorithms that they raffle off the names; you pick. Either way, admit it or not, these updates made a huge impact on the Search Engine Optimization (SEO) industry, and websites that relied on black hat practices were sandboxed by the mighty Google.

Minor algorithm updates happen almost every day, but the most prominent ones often aren’t announced until experts start noticing significant changes in the search results. Although Google regularly says that it tweaks the algorithm, it often gives us only bits and pieces. There are two advantages to this: we are pushed to keep improving the content of our websites, and to avoid spammy or “illegal” search optimization strategies.

For the last two years, there has been an inside joke among SEO experts that you should dodge black-and-white animals because they’re not good for your website’s health (the joke might have lasted longer if not for the recent Hummingbird update). Admittedly, though, these updates changed our SEO approaches, right? So let’s take a trip down memory lane and look at what these animals taught us.

Panda Update

According to Google, Panda affected up to 12% of search results. According to moz.com, it targeted thin content, content farms, sites with high ad-to-content ratios and a number of other quality issues. It rolled out on February 23, 2011, and it is still being updated today.

Rand Fishkin, a respected authority on all things SEO, analysed the winners and losers of Panda and posted the factors that he believed led Google to create it:

• Intrusive advertisements on websites; he claimed that sites with fewer “blocks of advertisements” were winners.

• “Ugly” websites; sites with modern, engaging, attractive and user-friendly designs are favoured by Google.

• User-generated content (UGC) sites with thin content; sites with more original, unpaid content that was not written for SEO won.

• Pages with less useful and less readable content; sites with rich, useful and interactive content won out over them.

Lessons Learned

This update is content-focused, so websites with thin, uninformative and obviously-for-SEO-only articles were greatly impacted. The most important lesson that we learned from Panda is to produce articles and content that answer the user’s questions.

After it rolled out, websites scrambled to rid themselves of bad content. On top of that, content farms that tolerated low-quality article contributions suffered hugely, so they were obliged to be more selective about the content posted on their sites.

Kevin Sanders, a fitness blogger, shares these lessons that he learned from the Panda update:

• Never be dependent on just one source of traffic

• Blog to express, not to impress

• Ask others for help

• Always stay up to date with SEO trends and updates

While this update is content-focused, Marcus Tober of Searchmetrics added that if a page is genuinely visually attractive to a user, “the page will be spared by the Panda update.” He also stated that ranking will be based on a page’s value to the user, as “opposed to just what content is on it.”

Here Comes the Penguin

Just when you thought you had got rid of thin content, along comes another animal update: the Penguin (seriously, what’s the connection between the name and the actual update?). While Panda centred on content, the Mighty G announced on April 24, 2012 that Penguin focuses on a number of spam factors such as keyword stuffing and link schemes. Google reported that it affected about 3.1% of English-language queries.

Google also said that this algorithm update was aimed at webspam: it lowers a site’s ranking if the site violates Google’s quality guidelines. Danny Sullivan expounded on the matter and listed four spam tactics that fall foul of Penguin. He mentioned the following:

• Keyword stuffing: bombarding a webpage with keywords or numbers in an attempt to manipulate its search rankings

• Link schemes: buying or selling links, excessive link exchanges, articles with keyword-rich anchor text links and automated services that create links to your site

• Cloaking: showing search engines different content from what users actually see

• Purposeful duplicate content: simply put, the same content found all around the Internet
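
To make the keyword stuffing item concrete, here is a minimal sketch that measures what share of a page’s words each term takes up. The 15% cut-off, the function names and the sample sentences are all assumptions made up for illustration; Google publishes no such threshold, and this is not how Penguin itself works.

```python
import re
from collections import Counter

# Hypothetical cut-off, chosen only for illustration; Google publishes no such figure.
DENSITY_THRESHOLD = 0.15


def word_shares(text: str) -> dict[str, float]:
    """Map each word to its share of all words in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    total = len(words)
    if total == 0:
        return {}
    return {word: count / total for word, count in Counter(words).items()}


def suspicious_keywords(text: str, threshold: float = DENSITY_THRESHOLD,
                        min_length: int = 4) -> list[str]:
    """List words of at least min_length characters whose share exceeds the threshold."""
    shares = word_shares(text)
    return sorted(w for w, s in shares.items() if len(w) >= min_length and s > threshold)


# Made-up sample copy: one stuffed snippet and one written for readers.
stuffed = "Cheap shoes! Buy cheap shoes online. Cheap shoes, best cheap shoes, cheap shoes deals."
natural = "Our guide compares running shoes by weight, cushioning and price so you can pick a pair that fits."

print(suspicious_keywords(stuffed))  # ['cheap', 'shoes']
print(suspicious_keywords(natural))  # []
```

A check like this is only a rough self-audit for your own copy; a high share is a prompt to reread the page as a user would, not proof of a penalty.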

Lessons Learned

Google advised webmasters to focus on creating high-quality sites that make for a good user experience. It also taught us to use only white hat SEO practices rather than building and acquiring spammy inbound links. Of course, Google did not give specific guidelines on how to do this; instead, it encouraged us to continue making “compelling and amazing websites.”

And since it focused on spammy links, it taught us to remove the bad links that we had, and to be sorry for them. Because of this update, link spamming and very specific keyword anchor linking are discouraged. Instead, SEO practitioners are encouraged to earn good links from authoritative sites. In a way, it also encouraged engagement within the online community; this might have been a preview of what Google really wants in the future, eh?

So, just a recap: by now, your site should have good content and no spammy links. What’s next?

Hummingbird Joins the Bandwagon

On August 20, 2013, Google rolled out another update. This time, they chose a colourful albeit tiny bird: the Hummingbird. Experts compared it to Caffeine (a 2010 update that increased Google’s indexing speed and resulted in a 50% fresher index), but Hummingbird is more focused on the semantics of search queries. In layman’s terms, Hummingbird tries to answer users’ complex queries, and if your content does not, it most probably will not be at the top of the search results. After all, it wouldn’t have been given that name if it weren’t “precise and fast.”

Google hopes that with this latest update, users will get more precise and appropriate answers to their complex questions. But how complex is “complex”, anyway? Danny Sullivan gives a practical example here. However, you may notice that your PageRank or your position in the SERPs did not change. This is possible because, according to Google, the update is more query-based than a major change to the algorithm’s formula.

Lessons Learned

Now that you have an idea about the Hummingbird, what lessons did we learn from it? Barbara Hollock, a specialist in all things semantic web, lists four lessons that we should apply to our websites to be prepared for any (damaging) effects of Hummingbird in the future.

• Understand that keywords will have “feelings”: websites that were keyword-centred in the past should move away from that approach because, under this new algorithm, ranking will be based on the complete question, not just on a particular relevant keyword. Address the user’s query instead of just “trying to make sure each page is appropriately categorized.”

• Improve your content: we all know that content is still king, but as much as possible, balance information with tone. Think like a user and write for the user. Gone are the days when search engines looked only at a particular keyword to list results; now they consider the whole question. In a way, the search engine thinks on its own.

• Consider your unlinked content: Hallock speculates that Hummingbird is now crawling unlinked content, so it’s best to update your robots.txt file to cover any pages you don’t want crawled.

• Go mobile: Hummingbird takes search queries from mobile devices heavily into account, so it’s advisable to make your website mobile-friendly and responsive.
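
For the robots.txt advice in the list above, here is a minimal sketch of how you might check which pages a set of rules leaves open to crawlers, using Python’s standard `urllib.robotparser` module. The rules, the paths and the `www.example.com` domain are hypothetical placeholders, not recommendations for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site; adjust the paths to your own.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
Disallow: /internal-search/
"""

# Parse the rules from text instead of fetching them over the network.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical pages, including unlinked ones a crawler might still discover.
paths = ["/blog/panda-lessons", "/drafts/unfinished-post", "/internal-search/results"]

for path in paths:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(path, "->", "crawlable" if allowed else "blocked")
```

Keep in mind that robots.txt only asks well-behaved crawlers to stay away from a path; it is not an access control, so anything truly private needs real protection.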

Conclusion

Now that we’ve taken a trip down memory lane and brought our sites in line with Google’s quality guidelines, the question is: what will the next update be, and how will it affect online marketing? As webmasters, online marketers and users, we can only speculate. But based on the updates they are rolling out, I wouldn’t be surprised if Google goes as far as AI in the next few years. Wouldn’t you agree?